To Take AI to the Next Level, We Need a Dose of Wisdom

I was recently asked by The Hill Times, a Canadian newspaper, if I thought Artificial Intelligence research in Canada is hitting a plateau. My thoughts on that question turned into a short op-ed, published last week. But since that is both paywalled and rather constrained in length by the format, I thought I would elaborate with a slightly longer version here on my blog.

As someone who has moved to Canada fairly recently, I’ve had the privilege of seeing Canadian AI research develop from an outside perspective as well as experiencing the AI landscape from the inside. I’ve also been an AI researcher since before the deep learning boom… and expect to be afterwards as well!

There is no doubt that Canada is a global leader in Artificial Intelligence. Thanks to the foresight of the 2017 pan-Canadian AI strategy, the first of its kind globally, investment has transformed research, enterprise, and the attraction of talent. Yet as talk of another ‘AI Winter’ abounds, will this be sustained?

Canada invested an initial $125 million and allocated a further $443 million in 2021. Post-strategy analyses, such as that by Accenture, highlight the transformative effect that this has had on research, start-ups and enterprise, foreign investment, and the attraction and retention of talent. Centres of global excellence were established, particularly in deep learning. Many of the recent breakthroughs achieved in Canada relating to computer vision, reinforcement learning, and generative models now drive new and exciting technologies around the world. It is uncontroversial to say that in the last decade, Canada has become known as a world-leading beacon of AI research and development.

Yet at the same time, many have started to predict another ‘AI winter’, a reference to the period during the 1970s and 80s when there was minimal interest in, or funding for, AI R&D. I can see why people are asking if Canada can uphold its momentum. Or have we indeed reached a plateau?

To answer this, we should probably understand what such a plateau might look like. And what would climbing higher toward the vision of ‘machines that do the sorts of things minds can do’ mean?

Source: https://www.flickr.com/photos/jpto_55/33198964291

We cannot ignore that the debate has started to turn. As Gary Marcus recently articulated, ‘deep learning is hitting a wall’. Because deep learning is essentially a tool that recognizes patterns, Marcus argues, there is a limit to which mental processes we are going to be able to simulate with the technique. We may end up looking back and noticing that the result of the first five years of Canada’s AI strategy was, essentially, just picking the next layer of low-hanging fruit.

Another feature of AI based on deep learning is its insatiable need for more data. To even the casual observer of the progress of modern AI technology, it must now seem implausible to conceive of AI tech without big data. The two have become inextricably linked in our minds: more data is better. Is this a problem?

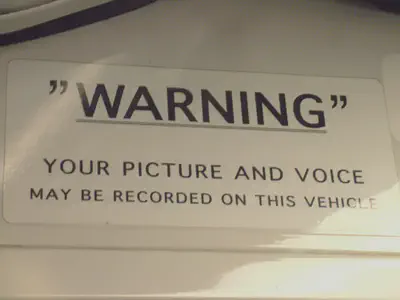

The answer is emphatically yes if we want to challenge the emerging doctrines of surveillance and data linkage. Adopting today’s data-driven AI creates a commercial and government imperative for widespread and high-frequency connected surveillance, which brings with it not just issues of privacy, but also power, agency, and identity, and with which we are only now beginning to grapple.

Regulation is clearly a crucial part of the picture. Ontario’s 2021 consultation on ‘trustworthy AI’ established some important priorities. Yet a common feature of such efforts here is the stickiness of trying to define what ‘counts’ as AI. There is a worry that unless this can be narrowed down, almost any automated decision-making or data-driven decision support system could be included, and then the ability to regulate and legislate becomes unwieldy.

Many people tie themselves in knots trying to define ‘intelligence’ (e.g. this makes for interesting reading), hoping that this will lead us to a more complete (and, they often say, more helpful) definition of ‘artificial intelligence’. Much of this misses the point, at least when deciding when to delegate to a machine an activity previously done in society by human minds. Once we have taken this step, we have already admitted a certain AI-ness to it.

On the other hand, the Center on Privacy & Technology at Georgetown Law, a thinktank that focuses on disparate impacts of surveillance policy on marginalized people, has made the decision to stop using the terms ‘artificial intelligence’ and ‘machine learning’, in favour of being specific about the technology and who is responsible for it. AI has become, Executive Director Emily Tucker argues, ‘a phrase that now functions in the vernacular primarily to obfuscate, alienate, and glamorize’.

There is no doubt that we ought to engineer machines (of any type) for accountability, or that those who build and operate them are responsible for the machine’s actions. This is not in question, and this is where Tucker is bang on the mark. But we have already reached the point at which neither full control nor understanding can always be assumed. What then?

This relinquishing of control is not simply a bug with AI; for many it is a necessary feature of what an autonomous mind-like machine would require. Perhaps in some situations we need to shift towards thinking about our relationship with someone else’s AI as we would about someone else’s dog: remaining cautious, yet holding the owner fully to account if things go wrong. And as Reeves and Nass famously found, people routinely and naturally treat machines like people, whether it’s being polite to a voice assistant or feeling sympathy for robots.

The question runs deeper: when is AI based on deep learning the kind of AI that we want to delegate to? Or is it missing something else profoundly mind-like? As I’ve previously argued, today’s AI technologies contain an unusual imbalance of insight and understanding: new insights arise from the models, while many of the ‘qualities’ that a human mind would have brought are utterly absent. Important aspects of our mental activity are, as yet, nowhere near delegated.

There is an important distinction to make here between AI as a simulated or synthetic mind, and “AI” as speech (note the quote marks), the marketing term so often used to obscure, divert, or confuse. “AI” as marketing motivates the projection of a particular image – an ‘AI janitor’, for example, or ‘AI-enabled workplace surveillance’ in place of a human supervisor. Ironically, when hiring people for cleaning tasks, we might rank trustworthiness above smarts, and it would seem a matter of basic human dignity that your workplace supervisor has the capabilities of empathy and nuance. But it is the use of the term ‘intelligent’ here that is noteworthy. It is an assertion of power that is used to deceive people into accepting some technology on the basis that it is somehow more than it is. And, in these respects, doing so is profoundly wrong.

Thankfully, there is pushback against workplace surveillance “AI”: a new law in Ontario would require employers to disclose electronic monitoring of workers; elsewhere, public outcry has led to such technologies being dropped by prominent firms.

Source: https://www.flickr.com/photos/missrogue/94403705/

So, we arrive at the question of trustworthy AI: when might we decide to depend on a mind-like machine in the face of some risk to ourselves? Or perhaps rather sarcastically, trustworthy “AI”: when might we decide to rely on the impression that the tech marketers wish to paint?

When it comes to the latter, we must develop the wisdom to see the technology that is in front of our eyes for what it is. At least we ought to hope that it is not too much to ask: what the system is doing; why it does it; how it does it; and why it does it that way. And in ways that the people affected by it understand. Only then can we start to ask: do we want it?

There are still immense challenges here for Canada and the world to tackle, not least the educational, democratic, and accessibility imperatives around an equitable and empowering access to such an understanding.

So, what ought future Canadian AI strategy strive for? If the answer is more computation, bigger datasets, and better training algorithms, then we will indeed have reached a plateau. The world already knows how to play that game, and “AI” as marketing gives us a clue as to where it goes next. If this form of “AI” as deceptive surveillance is the extent of our modern-day vision of intelligent machines, or indeed something taken as an inevitability, then we will have failed millions of citizens, and especially those at the poor end of structural power relationships.

On the other hand, the worry of an ‘AI Winter’ only exists if we cannot fathom how to see Spring. Perhaps the time has come for a radical rethink of what synthetic minds we want operating within our society. Are these the hyper-rational, data-hungry prediction machines of the late 2010s? Or ought we to expect more, and to challenge the immense talent within Canada and globally to deliver it?

It has long been a vision of many in AI to conceive of machines capable of a richer version of a mind than that imagined by data-driven problem solving alone; to include reflection, empathy, pause-for-thought, creativity, sociality, nuance, trust, and judgement, not just prediction. In short, let’s challenge ourselves to imagine machines with a dose of wisdom. And let’s bring a dose of wisdom to how we approach the use of machines in our society, too.